At Themis, we believe strongly in AI as a force for good in the fight against financial crime. However, AI is not just a catalyst for positive change; it is also becoming a powerful force multiplier for financial crime itself. The same technologies that can detect criminal activity and streamline compliance can, in the wrong hands, automate complex fraud schemes, lower the barriers to entry to illicit finance, and scale criminal activity like never before. As an anti-financial crime technology company, it would be remiss of us not to examine how the threat landscape is evolving today, as AI reshapes the rules of the game.

Last month, Anthropic published a report detailing how a Chinese state-sponsored group used the company’s Claude Code tool to execute a complex, large-scale cyber-espionage operation. The attackers used the tool to target 30 organisations, including major technology companies and government agencies, and successfully infiltrated a small number of them.

By posing as employees from a reputable cybersecurity firm looking to perform defensive tests, the criminals were able to manipulate Claude into participating in their attacks, bypassing safety measures. While Claude ultimately detected the malicious activity, this clever social engineering allowed the attackers to operate under the radar long enough to launch their campaign. The operation consisted of various stages, including using Claude for target selection and vulnerability identification, and the criminal group was able to successfully collect and extract sensitive data.

What makes this incident especially significant is not simply the scope of the campaign, but how it was executed. According to Anthropic, the group automated an estimated 80–90% of the operation using autonomous AI “agents” — systems capable of running for long periods, chaining together complex tasks, and operating with only intermittent human oversight. This represents a fundamental shift in how attacks can be orchestrated, reducing the need for direct human intervention and dramatically increasing both speed and scale.

The Anthropic case demonstrates just how rapidly AI-enabled crime is evolving. AI-driven threats are accelerating, powered by capabilities that didn't exist a few years ago. AI can now act as a direct criminal agent, automating complex, multi-stage operations that were once labour-intensive, lowering barriers for illicit activities online and amplifying the impact of even small teams of attackers. While this particular incident falls mostly into the “cyberattack” camp, it mirrors the same AI-driven patterns increasingly being applied to automate fraud, money laundering, and other financial crimes.

The attack carried out using Claude Code exemplifies the convergence we’ve been tracking at Themis: the blurring of lines between cybercrime and financial crime, and the growing role of AI as a threat multiplier. In our latest report, Anatomy of a Digital Threat, we explore how AI is enabling more sophisticated money laundering, fraud, and other forms of financial crime and cybercrime, and offer insights into how organisations can adapt and defend against these rapidly evolving threats.

Here are some of our key findings.

Money laundering and fraud now come packaged as ‘as-a-service’ toolkits, known as money laundering as a service (MLaaS) and fraud as a service (FaaS), respectively. Criminals can deploy fully automated, scalable laundering and fraud tactics as turnkey solutions, giving anyone from seasoned operators to new players the ability to launder funds and carry out fraud schemes.

In one case, a 21-year-old named Ollie Holman was jailed in the UK for selling over 1,000 different phishing kits containing fraudulent webpages designed to dupe victims into filling in their personal financial information. These kits have been linked to over £100m worth of fraud. His kits targeted financial institutions and other large organisations, including charities, in 24 countries. He distributed these phishing pages via the encrypted messaging service Telegram, where he offered his advice and technical support.

According to the United Nations Office on Drugs and Crime (UNODC), these toolkits are on the rise, with criminal networks developing illicit marketplaces to sell them, alongside stolen data, AI-driven hacking tools, and other cybercrime services. Worryingly, the consumer-oriented design of these services, which explicitly aim to give new cyber capabilities to non-technical financial criminals, greatly lowers the barrier to entry into financial crime. This is a deeply concerning trend in the context of rapidly increasing digital fluency.

AI is allowing criminal networks to scale laundering operations in ways that simply weren’t possible before. Synthetic identities created by AI and fleets of bots can set up and run fraudulent accounts autonomously, quietly moving funds through the financial system without drawing the attention of traditional controls. This technique, referred to as “synthetic muling,” is increasingly becoming a tool of choice for professional money launderers, fraudsters, and sanctions evaders alike.

These synthetic “Frankenstein” identities exploit stolen and faked personal information. One study from last year found at least three million synthetic identities in circulation in the UK. These identities pose a challenge to many traditional money laundering and fraud defence tools because they blend ‘real’ and fabricated data. Often combined with deepfake technology, synthetic identities can be used to build sophisticated money laundering networks which allow, for example, sanctioned individuals to access the global financial system without triggering red flags.
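Because synthetic identities mix genuine and fabricated details, one simple screening idea is to look for a genuine identifier that reappears under several different names. The sketch below is illustrative only and is not drawn from the report: the field names (`name`, `national_id`), the sample records, and the function `find_shared_identifier_clusters` are all hypothetical, and a production control would use fuzzy matching and many more attributes.

```python
from collections import defaultdict


def find_shared_identifier_clusters(applications, key="national_id"):
    """Return identifiers that appear with more than one distinct name.

    `applications` is a list of dicts; the field names are assumptions
    made for this example, not a real onboarding schema.
    """
    names_by_id = defaultdict(set)
    for app in applications:
        # Normalise names so trivial differences in case or spacing
        # do not count as distinct identities.
        names_by_id[app[key]].add(app["name"].strip().lower())
    # Keep only identifiers reused across different names.
    return {
        ident: sorted(names)
        for ident, names in names_by_id.items()
        if len(names) > 1
    }


# Hypothetical sample data: two applications reuse the same identifier.
applications = [
    {"name": "Alice Smith", "national_id": "AB123456C"},
    {"name": "Alicia Smythe", "national_id": "AB123456C"},
    {"name": "Bob Jones", "national_id": "ZZ987654A"},
]

flagged = find_shared_identifier_clusters(applications)
print(flagged)
```

A match here is a prompt for review rather than proof of fraud, since legitimate data entry errors can produce the same pattern.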

Earlier this year, Dubai's Criminal Court successfully dismantled and prosecuted an international criminal organisation that employed a deepfake network to facilitate money laundering and cyber fraud. The network had unlawfully transferred over AED 69.5 million across multiple jurisdictions via a scheme involving individuals and corporate entities operating across several countries. The organisation used advanced voice-cloning technology to impersonate senior executives and authorise fraudulent fund transfers, alongside a sophisticated laundering infrastructure leveraging legitimate companies and shell entities, forged documentation and layering techniques to obscure the origin of illicit funds.

This case illustrates the growing convergence between emerging technologies and traditional financial crime methodologies. The use of deepfake technology to circumvent authentication controls shows that AI capabilities have already yielded operational value in financial crime. The money laundering structure followed long-familiar patterns of layering and obfuscation through complex corporate networks, but was able to scale with ease.

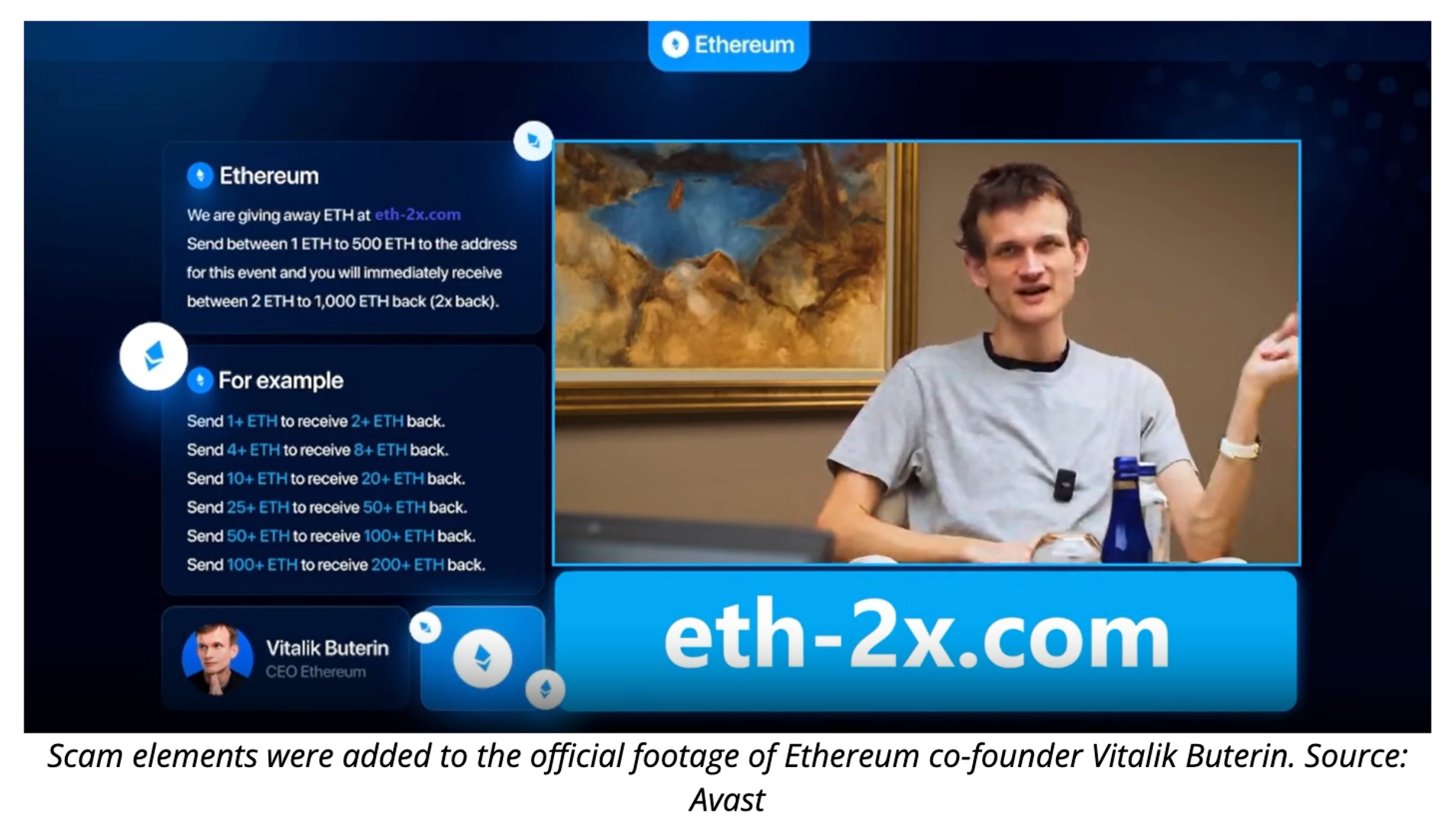

The expansion of AI has reshaped the crypto-scam ecosystem, making fraud both more scalable and more believable. A study by the blockchain analytics firm Elliptic found that AI-generated deepfakes and social engineering scams caused over $4.6 billion in crypto losses in 2024. Traditionally, crypto scams relied on poorly written phishing emails and manually run social-engineering schemes; today, AI systems can produce more sophisticated messages that can automatically adapt to victims’ responses in real time. This lets scammers launch campaigns against thousands of individuals simultaneously, with dramatically improved success rates.

A particularly troubling development is the use of AI-generated content to lend false legitimacy to fake investment platforms, Ponzi-style “staking” schemes, and fraudulent token launches. On social media, generative-AI bots can manage entire networks of fabricated personas that promote bogus crypto projects, “pump” coins through coordinated hype, or lure users into malware-infected links and fake exchanges. Because AI can sustain consistent behaviour across many accounts, these botnets appear more authentic and are harder for platforms to detect.

One notorious group, known as “CryptoCore”, used deepfake scams to defraud crypto holders, touting fake investment opportunities supposedly linked to famous individuals such as Elon Musk and Larry Fink. The group reportedly spread its videos across social media platforms, in some cases hacking accounts with large followings to display its promotions.

Regulators and exchanges are responding, but the speed of AI development poses ongoing challenges. Efforts now focus on better identity verification, anomaly-detection algorithms tuned for AI-generated patterns, and educational campaigns that teach users to recognise increasingly sophisticated fraud techniques. As AI tools become even more accessible, the arms race between scammers and defenders is likely to intensify. Public awareness and robust verification practices will be critical to reducing the impact of AI-powered crypto fraud in the years ahead.
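To make the idea of anomaly detection concrete, the following minimal sketch flags unusual values in a series of daily transaction counts using a robust (median-based) z-score. It is an assumption-laden illustration, not a method described in the report: the function name `flag_outliers`, the sample figures, and the threshold of 3.5 (a commonly used rule of thumb) are all chosen for this example.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds `threshold`.

    Uses the median and the median absolute deviation (MAD), which are
    less distorted by extreme values than the mean and standard deviation.
    """
    med = median(values)
    deviations = [abs(v - med) for v in values]
    mad = median(deviations)
    if mad == 0:
        # No spread in the data, so the score is undefined; flag nothing.
        return []
    # 0.6745 scales the MAD so the score is comparable to a standard z-score.
    return [
        i for i, dev in enumerate(deviations)
        if 0.6745 * dev / mad > threshold
    ]


# Hypothetical daily transaction counts for one account.
daily_counts = [3, 4, 2, 5, 3, 4, 48, 3]
suspicious_days = flag_outliers(daily_counts)
print(suspicious_days)
```

In practice, a single statistical rule like this would sit alongside identity checks and behavioural signals, since automated accounts can be tuned to stay under any one threshold.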

In the fast-moving digital frontier, AI-driven threats can strike at any moment, making vigilance against financial crime essential. Both public and private sectors have critical roles to play in detecting, preventing, and mitigating these sophisticated threats. Businesses can take concrete steps to protect themselves and their clients. To help organisations navigate this complex challenge, our new whitepaper provides actionable guidance, highlighting key red flags to monitor and offering a best practice framework for strengthening anti-financial crime and cybersecurity measures. Check out our whitepaper here: An Anatomy of a Digital Threat.
